Mobile Ad Measurement without IDFAs (in an iOS 14 world)

In June 2020, Apple shocked the mobile marketing world by announcing the new App Tracking Transparency framework. It was originally planned to roll out with iOS 14 in September 2020 but has since been postponed to 2021. Under this framework, apps need to ask users for permission to access their IDFA.

Experts estimate opt-in rates of between 10% and 20%, which means that the vast majority of iOS users will not be trackable. This has a drastic impact on mobile ad marketing and its measurement. Mobile app retargeting on iOS 14 essentially won’t be possible at a scale that makes it viable.

A good indication of how big an impact the required opt-in for IDFA tracking has is Facebook’s announcement that it will abandon the IDFA and its Facebook Audience Network.

Mobile Measurement Partners (MMPs) such as Adjust or AppsFlyer will only be able to provide tracking and attribution data for a small minority of iOS 14 users. At Adtriba we use the user journey tracking data provided by these MMPs for mobile app ad attribution, as described here. This will still be possible for Android users, but not for iOS 14 users at a level that makes sense from a measurement perspective.

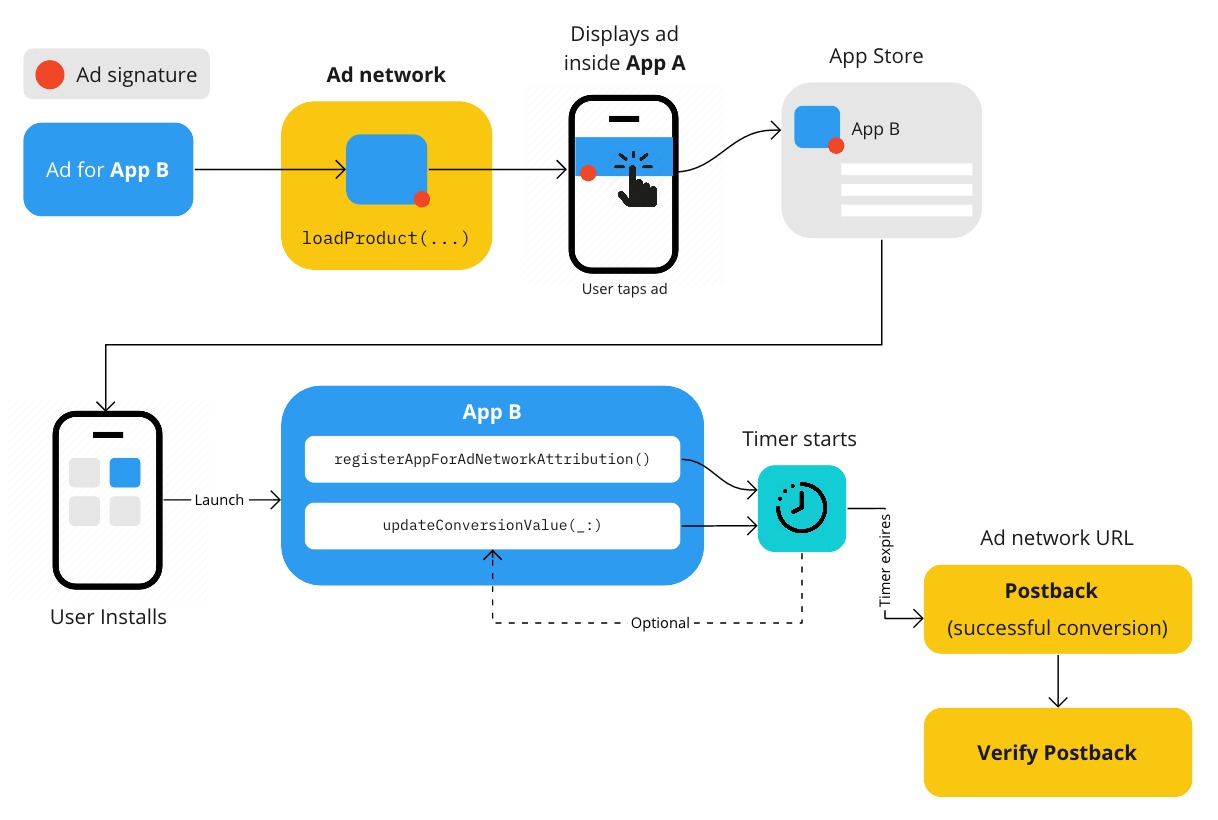

Through Apple’s SKAdNetwork it will still be possible to link installs and conversions to campaigns and marketing channels, albeit in a rather complex and indirect fashion and not on the user level. Here’s a good technical description of how the SKAdNetwork mechanism actually works.

SKAdNetwork Sequence of Events (Source)

SKAdNetwork mainly aims to provide ad networks with the limited information they need to optimize their targeting mechanisms, and it allows for some basic attribution. One of the major challenges is that it doesn’t allow marketing campaign clicks to be linked directly to conversions if the conversion happens more than 24 hours after the install (or the marketing touchpoint). For example, if a user clicks an install ad, installs the app, leaves the app and only comes back more than 24 hours after the install to convert, the conversion can’t be linked to the install ad. Additionally, ad view-through tracking won’t be possible with SKAdNetwork (see also here for additional limitations of SKAdNetwork).
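The 24-hour limitation can be illustrated with a minimal sketch. It assumes a single fixed 24-hour window after the install, as in the simplified description above; the actual SKAdNetwork timer logic is more involved, and the function name and timestamps are hypothetical.

```python
from datetime import datetime, timedelta

# Assumption: one fixed 24-hour window after the install, as described in
# the text. The real SKAdNetwork timer mechanism is more involved.
ATTRIBUTION_WINDOW = timedelta(hours=24)


def conversion_is_linkable(install_time: datetime, conversion_time: datetime) -> bool:
    """Return True if the conversion falls inside the simplified window."""
    elapsed = conversion_time - install_time
    return timedelta(0) <= elapsed <= ATTRIBUTION_WINDOW


# Hypothetical timestamps for illustration only.
install = datetime(2021, 1, 10, 9, 0)
same_day = datetime(2021, 1, 10, 18, 30)      # 9.5 hours after the install
two_days_later = datetime(2021, 1, 12, 9, 0)  # 48 hours after the install

print(conversion_is_linkable(install, same_day))        # True
print(conversion_is_linkable(install, two_days_later))  # False
```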

MMPs are integrating the SKAdNetwork concept into their solutions, but just looking at the input data available through SKAdNetwork makes it obvious that accurate user-level attribution for iOS is a thing of the past. Some question whether MMPs will still play a role in the mobile marketing space if accurate attribution isn’t possible, especially with Google likely to follow suit in restricting access to mobile user-level data.

Fingerprint-based tracking is seen by a few MMPs as part of the solution, but this is definitely not what Apple had in mind when it announced restricting access to the IDFA. It’s highly likely that Apple will monitor this closely and penalize apps that use such tracking technology, which is also on a collision course with GDPR and CCPA. App marketers should therefore think carefully before using these kinds of alternatives to legitimate user journey tracking.

Solution

Despite these drastic changes, mobile advertisers still need a way to assess and measure the performance of their marketing channels and investments as accurately as possible. Concretely, measurement and optimization should be possible on the following levels:

- Operational: which creative works best, what’s the CVR of different text ads, keyword level

- Tactical: test 10000 EUR on a new channel (e.g. Snapchat or TikTok), monthly budget planning, which channels generate the most installs

- Strategic: planning budget allocation for the next fiscal year, how much to spend on branding vs. performance, offline, TV, social / FB vs. AdWords

Marketing Mix Modeling

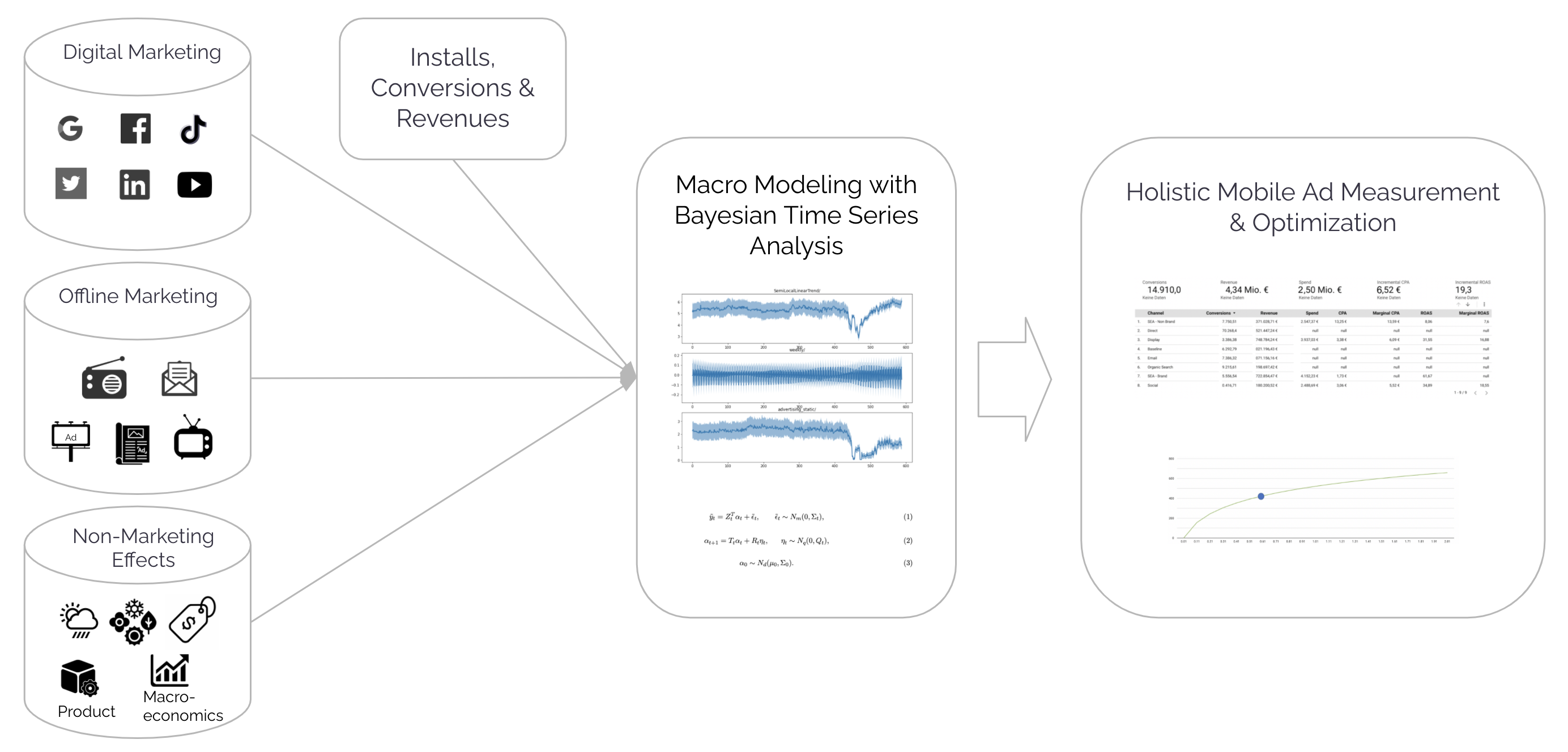

For tactical and strategic optimization of mobile marketing activities, marketing mix modeling (MMM) can be a suitable solution. For the mobile measurement case, MMM includes statistical analysis of time series data from marketing activities (e.g. daily spend on Instagram app install ads or daily spend on mobile app retargeting ads) to understand their impact on installs and conversions. In addition to the marketing data, non-marketing effects can be integrated as well, such as seasonality, trend or product changes. This type of macro modeling implicitly accounts for cross-device effects, which is another advantage over user-level journey modeling.
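As a rough illustration of the idea, the following sketch fits an ordinary least-squares regression of daily installs on daily channel spend plus a trend and a weekend effect. It is a deliberately simplified stand-in, not Adtriba’s actual model: the data is synthetic, the channel names and "true" coefficients are invented for the example, and typical MMM refinements such as carry-over (adstock) and saturation effects are left out.

```python
import random


def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_ols(rows, y):
    """Least-squares coefficients via the normal equations (X'X) b = X'y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * t for r, t in zip(rows, y)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Synthetic data: all numbers below are assumptions made up for this sketch.
rng = random.Random(42)
TRUE = {"intercept": 200.0, "instagram": 0.08, "retargeting": 0.03,
        "trend": 0.5, "weekend": 40.0}

rows, installs = [], []
for day in range(84):  # twelve weeks of daily observations
    instagram = rng.uniform(1000, 6000)    # daily spend in EUR
    retargeting = rng.uniform(500, 3000)   # daily spend in EUR
    weekend = 1.0 if day % 7 in (5, 6) else 0.0
    features = [1.0, instagram, retargeting, float(day), weekend]
    expected = sum(c * f for c, f in zip(TRUE.values(), features))
    rows.append(features)
    installs.append(expected + rng.gauss(0, 15))  # add random noise

coefficients = dict(zip(TRUE, fit_ols(rows, installs)))
for name, value in coefficients.items():
    print(f"{name:12s} estimated={value:8.3f} true={TRUE[name]:8.3f}")
```

The estimated spend coefficients can be read as installs per additional EUR of daily spend on the respective channel, under the assumptions of this toy model.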

Applying macro modeling for holistic mobile ad measurement.

There’s a lot of talk about marketing mix modeling (MMM), or modeling of aggregated data, as the solution to all challenges arising from the restriction of user journey tracking. It’s important to understand the possibilities but also the limitations of MMM. Since MMM works on aggregated data that doesn’t involve any personal data, e.g. the number of clicks or views of a particular Instagram ad per day, it’s not impacted by restrictions such as the IDFA deprecation, ITP or GDPR. The first challenge with aggregated data modeling is a lack of sufficient data points, e.g. because advertisers might not have a long enough recorded history of marketing activities.

Secondly, if the variance in the input marketing time series data is too low, it’s hard to model any impact on conversions and revenues. For example, if an advertiser spends 5000 EUR per day on Instagram ads without any variation, it would be difficult to model any impact on sales, because a constant spend series can’t be told apart from the baseline level of sales.
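One simple way to check a spend series for this problem before modeling is to look at its coefficient of variation. The sketch below is only an illustration: the threshold of 0.1 is an arbitrary assumption, not an established rule, and the spend series are made up.

```python
from statistics import mean, pstdev


def coefficient_of_variation(series):
    """Standard deviation relative to the mean; 0 means no variation at all."""
    avg = mean(series)
    return pstdev(series) / avg if avg else 0.0


def has_enough_variation(series, threshold=0.1):
    """Assumption: 0.1 is an arbitrary illustrative cut-off."""
    return coefficient_of_variation(series) >= threshold


flat_spend = [5000.0] * 30                     # 5000 EUR every single day
varied_spend = [3000.0, 5000.0, 7000.0] * 10   # same average, but it varies

print(has_enough_variation(flat_spend))    # False
print(has_enough_variation(varied_spend))  # True
```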

Thirdly, the impact of small changes in ad creatives as well as small tests on new channels might not be detectable through marketing mix modeling techniques. For example, if the monthly ad spend is 500,000 EUR, a small test of 2,000 EUR on TikTok (0.4%) will likely go undetected by any analysis on aggregated data. That means that part of the marketing manager’s job is to plan budget allocations and tests at a scale large enough for marketing mix modeling to actually pick up the impact. In the above example, this might mean spending 20,000 EUR on the TikTok test instead.
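The arithmetic behind this example is simple enough to script when planning tests. In the sketch below, the function names are hypothetical, and the 4% target share is merely the ratio implied by the larger test budget in the example, not a general detection threshold.

```python
def budget_share(test_budget, monthly_spend):
    """Share of the total monthly spend that goes into the test."""
    return test_budget / monthly_spend


def minimum_test_budget(monthly_spend, min_share):
    """Budget needed for the test to reach a given share of monthly spend."""
    return monthly_spend * min_share


MONTHLY_SPEND = 500_000  # EUR, from the example above

print(f"{budget_share(2_000, MONTHLY_SPEND):.1%}")    # 0.4%
print(f"{budget_share(20_000, MONTHLY_SPEND):.1%}")   # 4.0%

# Assumption: a 4% share is used purely for illustration.
print(minimum_test_budget(MONTHLY_SPEND, 0.04))
```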

Mobile marketers therefore need to be aware of these characteristics of MMM in order to design their campaigns and allocate their budgets accordingly. Put plainly, you can’t apply MMM if you only want to test small budget shifts and their effect.

For one of our mobility clients, FREE NOW, we applied the macro data modeling approach to compare it to their existing install attribution via an MMP. Within the POC, the resulting model had a MAPE of 9% on the hold-out/test set, and it allows evaluating the effect of the different marketing activities on install numbers. FREE NOW regularly receives install attribution numbers from their MMP, but those are based on last-click attribution. The aggregated data modeling approach is more holistic since it includes all digital paid marketing campaign touchpoints and also allows including offline campaigns. For example, if a campaign tends to have an effective assisting impact on installs but is rarely the last click before the install, it will be undervalued through the MMP’s last-click perspective. In this case, the MMM approach delivers a more realistic assessment by accounting for the assist performance.
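For readers unfamiliar with the metric, MAPE (mean absolute percentage error) averages the absolute prediction error relative to the actual value over all hold-out observations. A minimal sketch follows; the install numbers are invented for illustration and are not FREE NOW data.

```python
def mape(actual, predicted):
    """Mean absolute percentage error in percent.

    Assumes the two lists have equal length and no actual value is zero.
    """
    errors = [abs(a - p) / abs(a) for a, p in zip(actual, predicted)]
    return 100.0 * sum(errors) / len(errors)


# Hypothetical daily install counts on a hold-out set.
actual_installs = [1000, 1200, 800, 1000]
predicted_installs = [900, 1320, 880, 1050]

print(round(mape(actual_installs, predicted_installs), 2))  # 8.75
```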

The drawback is that the measurement results of the macro modeling aren’t per se available on the same level of granularity as the information delivered by the MMP. MMPs are able to deliver data on the most detailed level, e.g. on a Google click-id level, while macro modeling might only occur on a channel & campaign type level. There are ways to further break down these measurement insights, but this might come at the cost of accuracy.

As a next step, the model’s accuracy will be further improved, and other conversion events and offline marketing campaigns will be included in the MMM for FREE NOW. This macro modeling approach is a holistic and cross-platform way to measure mobile app ad effectiveness, and one that works reliably in a post-IDFA world.

The results of the MMM are being validated by comparing them to the mobile app tracking data that is still available from Android devices. Comparing the macro measurement insights to results from experiments and AB-Tests is another good way to validate them further.

Experiments & AB-Testing

The gold standard for understanding the actual incrementality of marketing activities is the randomized controlled trial (RCT). RCTs facilitate learning about the causal relationship between marketing activities and desired outcomes, such as conversions. There are ways to incorporate causal inference into the macro modeling approach (e.g. Granger causality), but RCTs are the more robust way to actually prove causality.

Why not then run experiments and RCTs all the time to measure marketing performance? There are a couple of reasons why experiments are not a feasible option for continuous measurement. First of all, producing reliable results requires a lot of discipline and scientific rigor, which is hard if not impossible to achieve in a business context. For example, an experiment’s duration needs to be fixed before the test starts, and the test can only be evaluated once that date is reached. In a business context, people tend to look at the results on a daily basis and end the test once the desired outcome is achieved. This seriously undermines the validity of the results.

Testing different campaigns at the same time with overlapping test and control groups (e.g. an FB campaign and a Google Ads test at the same time in the same customer group) causes additional problems, for example due to the multiple comparisons problem and alpha inflation. Opportunity costs also need to be considered when setting up experiments: if the campaign being tested is successful (which is most likely the assumption before the experiment starts), there are opportunity costs involved in not showing the respective ads to users in the control group.

Even if experiments are conducted correctly, they only deliver a one-time snapshot of how a marketing activity performs. A marketing campaign’s effectiveness can vary quite dramatically over time, so the results from an experiment need to be understood as a snapshot that helps to understand the causal structure within the marketing mix. They are rather unsuitable as the basis for ongoing marketing performance reporting.
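The alpha inflation mentioned above can be quantified with a short calculation. The sketch below assumes the tests are statistically independent, which is a simplification (overlapping customer groups would violate it), and shows the Bonferroni correction as one common, conservative remedy.

```python
def familywise_error_rate(alpha, num_tests):
    """Probability of at least one false positive across independent tests."""
    return 1.0 - (1.0 - alpha) ** num_tests


def bonferroni_alpha(alpha, num_tests):
    """Per-test significance level that keeps the overall rate at most alpha."""
    return alpha / num_tests


ALPHA = 0.05  # conventional significance level, chosen for illustration

for num_tests in (1, 2, 5, 10):
    fwer = familywise_error_rate(ALPHA, num_tests)
    corrected = bonferroni_alpha(ALPHA, num_tests)
    print(f"{num_tests:2d} tests: false-positive risk {fwer:.1%}, "
          f"corrected per-test alpha {corrected:.4f}")
```

Under these assumptions, running ten tests at a 5% level gives roughly a 40% chance of at least one false positive.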

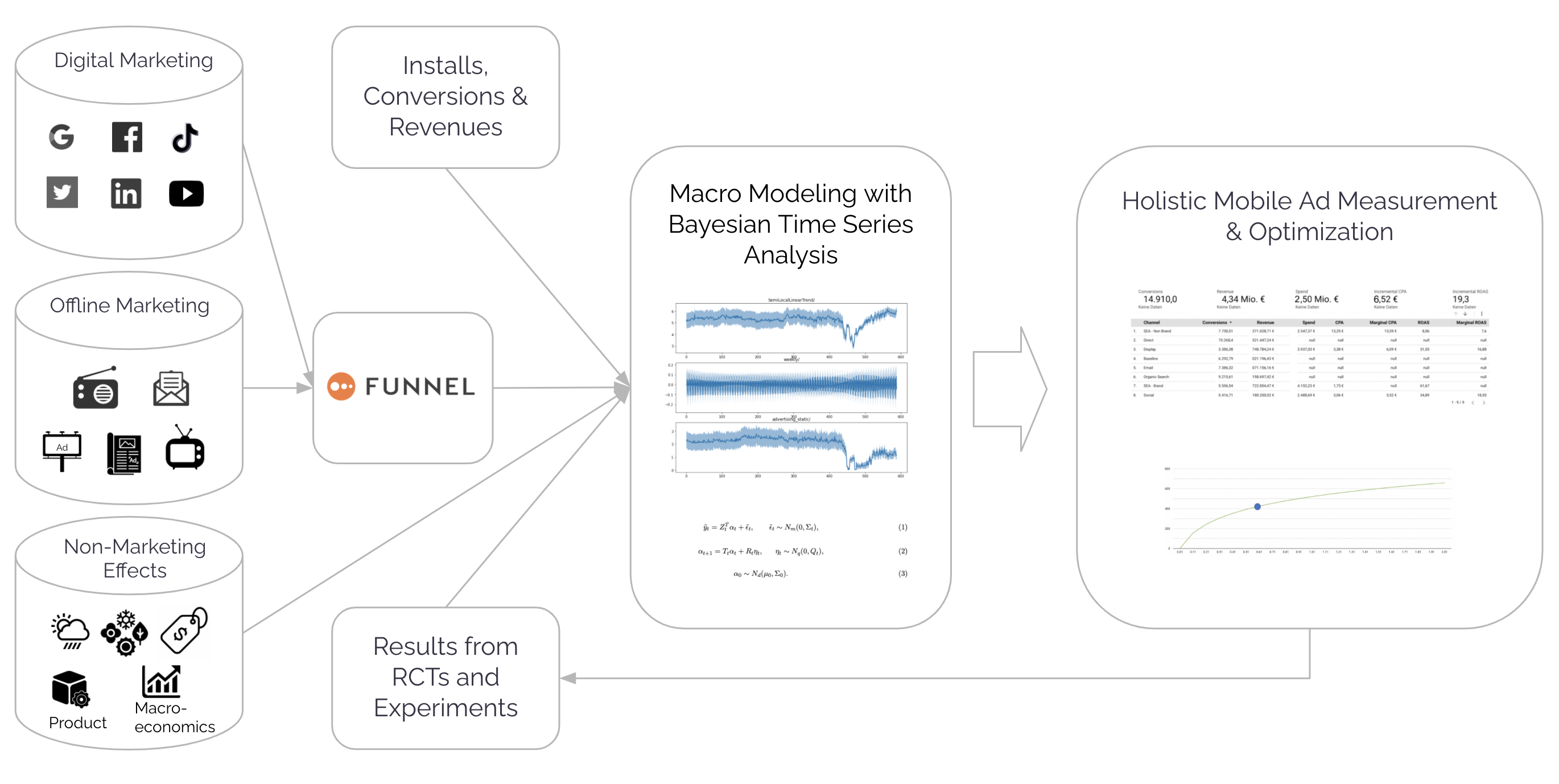

Despite the limitations of experiments and RCTs for marketing measurement, there are some good reasons to run them. Validating the results of measurement approaches, such as MMM or data-driven multitouch attribution, is one of the best use cases for such experiments. After all, it’s important to regularly check the validity and quality of marketing measurement systems, and RCTs are the best way to do it. Also, existing results from experiments, if produced under the discussed requirements with the necessary rigor, can be used as valuable input data for Bayesian macro modeling in the form of priors. Priors can be seen as prior knowledge or facts about the effects of interventions, such as marketing activities, and their interdependencies. For example, such a fact could be that TV ads can cause a rise in clicks on branded search ads but not the other way around.
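To give a feel for how an experiment result can act as a prior, the sketch below combines a prior and a model estimate for a single channel effect using the textbook normal-normal update. This is a strongly simplified stand-in for a full Bayesian marketing mix model, and all numbers are hypothetical.

```python
def combine_normal(prior_mean, prior_sd, estimate, estimate_sd):
    """Posterior mean and sd for a normal prior and a normal likelihood.

    Each source is weighted by its precision (1 / variance), so the more
    certain source pulls the result more strongly towards itself.
    """
    prior_precision = 1.0 / prior_sd ** 2
    data_precision = 1.0 / estimate_sd ** 2
    posterior_precision = prior_precision + data_precision
    posterior_mean = (
        prior_mean * prior_precision + estimate * data_precision
    ) / posterior_precision
    return posterior_mean, posterior_precision ** -0.5


# Hypothetical numbers: installs per EUR of spend on one channel.
experiment_effect, experiment_sd = 0.05, 0.01  # prior from an RCT
model_effect, model_sd = 0.09, 0.02            # estimate from aggregated data

posterior_mean, posterior_sd = combine_normal(
    experiment_effect, experiment_sd, model_effect, model_sd
)
print(f"posterior effect: {posterior_mean:.3f} (sd {posterior_sd:.4f})")
```

Because the experiment is assumed to be the more precise source here, the combined estimate ends up closer to the experiment result than to the model estimate.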

Adtriba’s Mobile Ad Measurement System including integration of RCT results and marketing data consolidation through Funnel.

Summary

Ideally, these different methodologies for measuring marketing performance should be combined to achieve reliable and robust measurement of marketing activities in the post-IDFA iOS 14 world.

The basis of marketing measurement for mobile app campaigns is macro-level measurement models built on aggregated marketing data. This data mostly consists of cost, click and view data per marketing channel/campaign and per day, and it should be integrated through data integration systems such as Funnel. This way, costly and error-prone manual handling of CSV or Excel files can be avoided. Adtriba has partnered with Funnel for automated integration of aggregated marketing data.

The results of the MMM, differentiated by iOS vs. Android, are being validated by comparing them to the mobile app tracking data that is still available from Android devices. User journeys from opt-in iOS 14 users can also be compared to the MMM results, although opt-in users will most likely behave differently from users who haven’t opted in to IDFA tracking, so they might not be representative. Extrapolating from these users to all iOS 14 users therefore isn’t advisable.

RCTs, AB-Tests and experiments are other valuable sources of information for validating the results from macro-level modeling. Recent results from experiments can also be used as inputs for the MMM, e.g. in the form of priors. Experiments should be conducted regularly and with the necessary scientific rigor and discipline to maximize the validity and robustness of the results.

For granular and operational optimization of ad campaigns, e.g. optimizing CVR and creatives, a mix of the remaining user-level data and the limited data available from SKAdNetwork should be applied. Even with all the limitations, it should still be possible to get some basic estimates for optimizing e.g. text ads and creatives. This depends on what additional data Apple will make available and whether it will relax the current restriction to only 100 different campaigns.

Overall, marketing managers need to be adaptive and continuously follow the latest developments in restrictions and regulations that affect measurement and optimization. MMM is based on aggregated data, which doesn’t depend on user-level identifiers and should therefore remain available. That makes it a solid and robust base for mobile marketing measurement and optimization systems.